Aquila-CFD

Aquila-CFD is our core post-processing infrastructure at the HPCVL. It can target CPUs, GPUs, and other hardware accelerators, both within a single node and across hundreds or thousands of nodes. Aquila currently uses TensorFlow as its performance portability layer, which provides cross-vendor and cross-platform performance portability.

High Performance for CFD Big Data

Distributed Memory

Aquila currently uses MPI as its primary distributed-memory abstraction. As a result, it can be deployed transparently on the vast majority of high-performance computing clusters available to practitioners.
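Flow fields can be processed independently, so one way to spread them across MPI ranks is a contiguous block partition of the flow-field indices. The sketch below assumes an even block split, which is not necessarily Aquila's scheme, and the name `block_partition` is hypothetical. In a real run, `rank` and `size` would come from an MPI communicator (for example mpi4py's `MPI.COMM_WORLD.Get_rank()` and `Get_size()`), and each rank's partial results would then be combined with a reduction such as `MPI_Allreduce`.

```python
def block_partition(n_fields: int, rank: int, size: int) -> range:
    """Return the contiguous range of flow-field indices owned by `rank`.

    Hypothetical helper: `rank` and `size` would normally come from an MPI
    communicator; here they are plain integers so the logic runs anywhere.
    """
    if not 0 <= rank < size:
        raise ValueError("rank must satisfy 0 <= rank < size")
    base, extra = divmod(n_fields, size)
    # The first `extra` ranks each take one additional flow field.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


if __name__ == "__main__":
    # Example: 40,000 flow fields spread over 96 ranks.
    sizes = [len(block_partition(40_000, r, 96)) for r in range(96)]
    print(min(sizes), max(sizes), sum(sizes))
```

Contiguous blocks keep each rank's reads sequential in time. If flow fields differ in cost, a round-robin split (`range(rank, n_fields, size)`) is a simple alternative.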

Scalable, out-of-core computation

Given the ever-growing demand for larger domains and higher temporal resolution in CFD, Aquila is designed to operate one flow field at a time. It keeps intermediate structures in memory and relies on a highly efficient, asynchronous pre-fetcher that reads upcoming flow fields while the current one is processed, giving the "illusion" of an in-memory dataset.
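A minimal sketch of asynchronous pre-fetching follows. It assumes a user-supplied `load(i)` that reads flow field `i` from storage. A background thread reads ahead into a bounded queue while the caller processes the current field, so at most `depth` extra fields are held in memory. The names `prefetch` and `load` are hypothetical. The sketch also uses a single standard-library thread rather than Aquila's own thread pool.

```python
import queue
import threading
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def prefetch(load: Callable[[int], T], indices: Iterable[int],
             depth: int = 2) -> Iterator[T]:
    """Yield load(i) for each index while a background thread reads ahead.

    The bounded queue holds at most `depth` loaded flow fields, which caps
    the extra memory spent on read-ahead.
    """
    buf: queue.Queue = queue.Queue(maxsize=depth)

    def worker() -> None:
        try:
            for i in indices:
                buf.put(("data", load(i)))  # blocks while the queue is full
            buf.put(("done", None))
        except Exception as exc:  # hand read errors to the consumer
            buf.put(("error", exc))

    threading.Thread(target=worker, daemon=True).start()
    while True:
        kind, payload = buf.get()
        if kind == "done":
            return
        if kind == "error":
            raise payload
        yield payload
```

In a loop such as `for field in prefetch(read_field, range(n_fields)): accumulate(field)`, reading field i+1 overlaps with processing field i. Here `read_field` and `accumulate` are placeholders for format-specific code. If the consumer stops early, the daemon worker may stay blocked on the full queue, so a production version would add a cancellation signal.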

Pluggable File I/O

We have decoupled the core pipeline from I/O (both input and output). This yields a highly modular interface that can support drastically different file formats as needed: adding a format only requires implementing a "reader" or a "writer".
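The sketch below shows one possible shape for this pluggable boundary, using Python abstract base classes. The core stage, here a mean-flow computation, sees only the abstract `FlowFieldReader` and `FlowFieldWriter` interfaces. Each file format would supply its own subclass. All class and method names are hypothetical, and the in-memory reader and writer stand in for real format-specific code.

```python
from abc import ABC, abstractmethod
from typing import Dict, List

Field = Dict[str, List[float]]  # assumption: variable name -> flattened values


class FlowFieldReader(ABC):
    """Hypothetical reader interface: one subclass per input format."""

    @abstractmethod
    def __len__(self) -> int: ...

    @abstractmethod
    def read(self, index: int) -> Field: ...


class FlowFieldWriter(ABC):
    """Hypothetical writer interface: one subclass per output format."""

    @abstractmethod
    def write(self, name: str, field: Field) -> None: ...


class InMemoryReader(FlowFieldReader):
    def __init__(self, fields: List[Field]) -> None:
        self._fields = fields

    def __len__(self) -> int:
        return len(self._fields)

    def read(self, index: int) -> Field:
        return self._fields[index]


class DictWriter(FlowFieldWriter):
    def __init__(self) -> None:
        self.store: Dict[str, Field] = {}

    def write(self, name: str, field: Field) -> None:
        self.store[name] = field


def mean_flow(reader: FlowFieldReader, writer: FlowFieldWriter) -> None:
    """Pipeline stage that depends only on the abstract interfaces."""
    n = len(reader)
    sums: Field = {}
    for i in range(n):
        for var, values in reader.read(i).items():
            acc = sums.setdefault(var, [0.0] * len(values))
            for k, v in enumerate(values):
                acc[k] += v
    writer.write("mean", {var: [s / n for s in acc] for var, acc in sums.items()})
```

To support a new format, subclass `FlowFieldReader` or `FlowFieldWriter`; `mean_flow` itself does not change.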

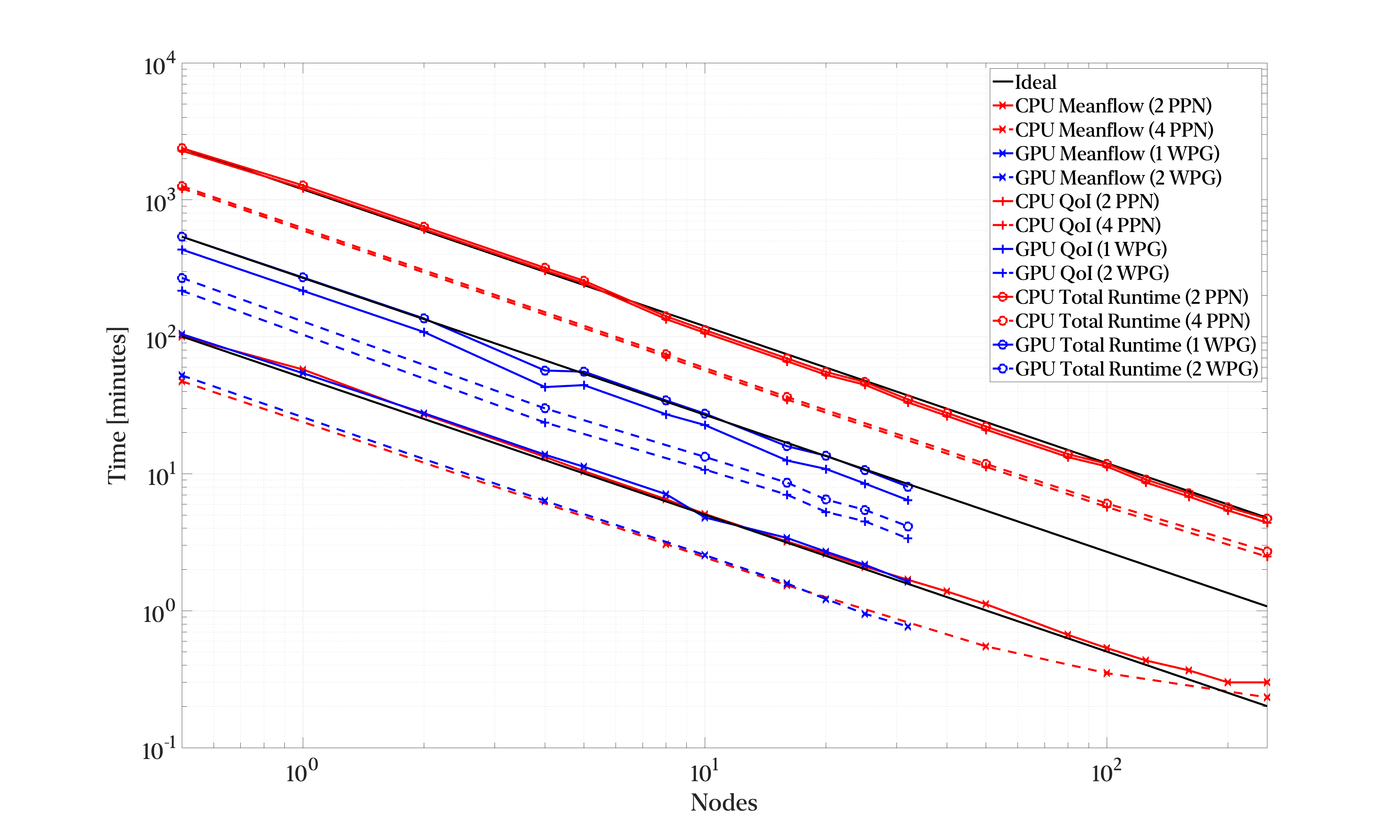

Figure: Strong scaling performance for a low-Re, incompressible turbulent boundary layer over a flat plate.

The study post-processed 40,000 flow fields (approximately 3.38 TB). We explored several processes per node (PPN) on CPU-only nodes, with the remaining cores populated by threads, and several workers per GPU (WPG) on GPU nodes. The study was designed to evaluate the impact of GPU oversubscription while keeping the number of independent processes comparable between the CPU and GPU runs.

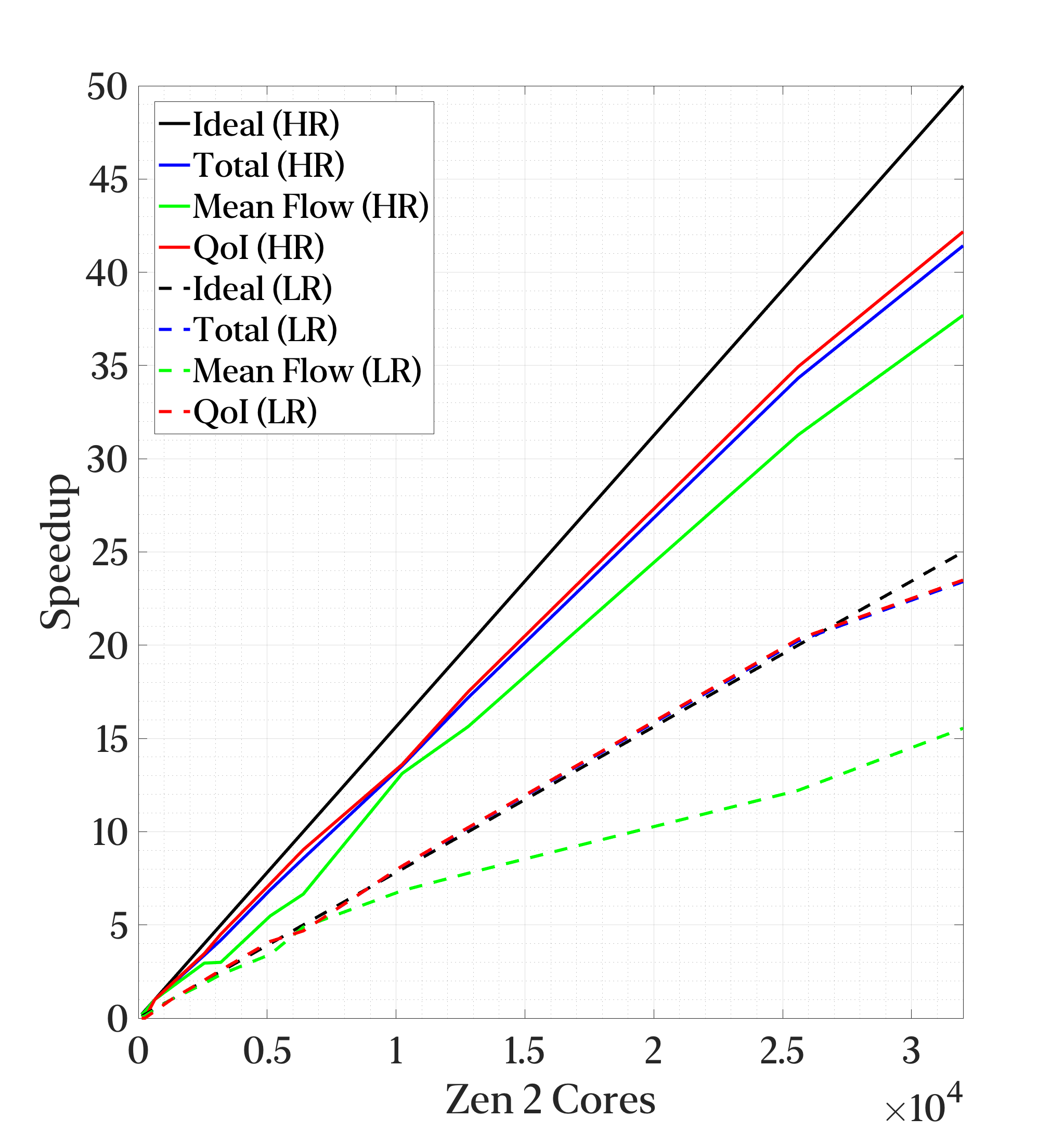

Figure: Speed-up results for two strong scaling studies: 40,000 flow fields of a low-Reynolds-number (LR) turbulent boundary layer and 4,000 flow fields of a moderately high-Reynolds-number (HR) turbulent boundary layer.

Regardless of dataset size, the main conclusion for out-of-core post-processing is "more work = more efficiency". The mean flow requires only one multiply-accumulate operation (2 FLOPs) per flow field, so its computation quickly becomes bottlenecked by I/O. Adding more operations, which is the essence of the core post-processing pipeline, increases the amount of computation performed for each byte read and therefore improves scaling performance.
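A back-of-the-envelope overlap model makes the "more work = more efficiency" argument concrete. If reading and computing overlap perfectly, the fraction of peak compute actually used is `t_compute / max(t_io, t_compute)`. The sketch below treats the mean flow's single multiply-accumulate as 2 FLOPs per stored 8-byte (float64) value. The bandwidth and throughput figures are hypothetical placeholders, not measurements from the study above.

```python
def compute_utilization(flops_per_value: float, bytes_per_value: int,
                        io_gb_per_s: float, compute_gflop_per_s: float) -> float:
    """Fraction of peak compute used when I/O and computation overlap perfectly."""
    t_io = bytes_per_value / (io_gb_per_s * 1e9)               # seconds to read one value
    t_compute = flops_per_value / (compute_gflop_per_s * 1e9)  # seconds to process it
    return t_compute / max(t_io, t_compute)


if __name__ == "__main__":
    # Hypothetical node: 5 GB/s of read bandwidth, 1 TFLOP/s of usable compute.
    # 2 FLOPs per value corresponds to one multiply-accumulate, as for the mean flow.
    for flops in (2, 20, 200, 2000):
        u = compute_utilization(flops, 8, io_gb_per_s=5.0, compute_gflop_per_s=1000.0)
        print(f"{flops:>5} FLOPs/value -> {u:.2%} of peak compute")
```

With these placeholder numbers, a mean-flow-like stage keeps the processors busy only about 0.1% of the time. A stage only becomes compute-bound once it performs at least 1,600 FLOPs per value, the point where reading 8 bytes and processing them take equally long.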

Gaining insight by visualization

Two-Point Correlations

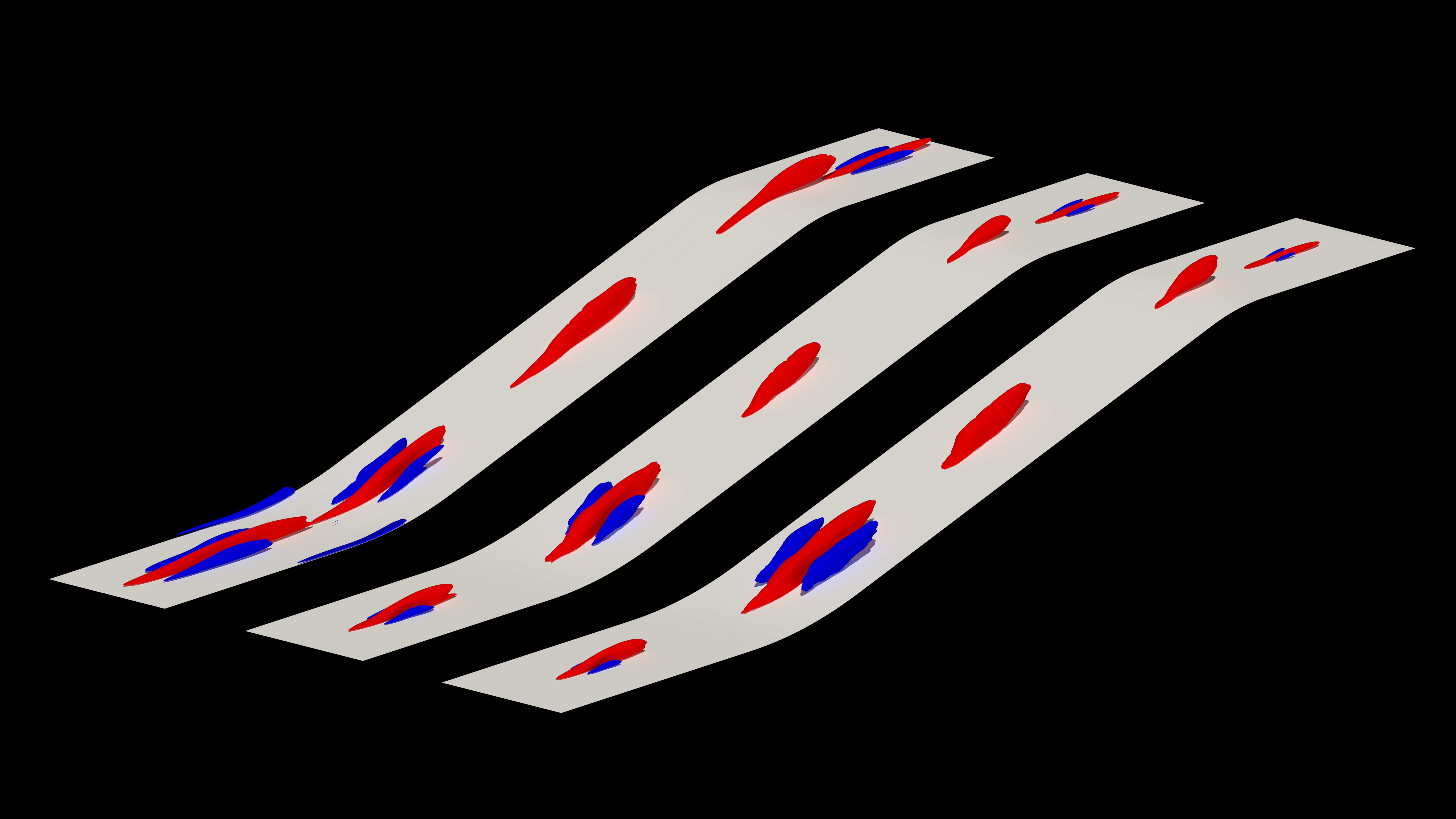

Visualizing regions of coherent motion in a turbulent boundary layer can shed light on many energy and momentum transfer phenomena. Although a myriad of complex techniques exist to visualize, study, and quantify coherent regions, the two-point correlation (TPC) is a simple yet powerful technique for visualizing coherent flow parcels. Aquila produces high-quality, volumetric TPCs in a highly scalable fashion. These can be visualized on any visualization platform or stored in highly efficient formats for archival. Aquila leverages the convolution theorem to compute TPCs in Fourier space and can exploit homogeneity along a given dimension to provide 2-4x improvements in memory usage and performance. Even so, calculating the two-point correlation across a full 3D domain remains computationally expensive and requires an efficient implementation.
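The NumPy sketch below computes a correlation in Fourier space. It subtracts the mean along the homogeneous directions, transforms the fluctuation, multiplies the spectrum by its complex conjugate, transforms back, and normalizes by the zero-separation value. It assumes the chosen (non-negative) axes are periodic, so circular correlation is appropriate, and it handles a single snapshot. Ensemble averaging over many flow fields and Aquila's homogeneity-based savings are not shown. The function name is hypothetical. `rfftn`/`irfftn` are used only because they roughly halve spectral storage for real-valued input.

```python
import numpy as np


def two_point_correlation(u: np.ndarray, axes: tuple) -> np.ndarray:
    """Normalized two-point autocorrelation R of u' along the given axes.

    Correlation theorem: sum_x u'(x) u'(x + r) = IFFT(FFT(u') * conj(FFT(u'))).
    The listed axes (non-negative ints) are assumed periodic/homogeneous.
    """
    # Fluctuation about the mean taken over the homogeneous directions.
    fluct = u - u.mean(axis=axes, keepdims=True)
    spec = np.fft.rfftn(fluct, axes=axes)
    power = spec * np.conj(spec)  # |FFT(u')|^2
    corr = np.fft.irfftn(power, s=[u.shape[a] for a in axes], axes=axes)
    # Zero-separation value (the variance) at each non-correlated position.
    origin = tuple(slice(0, 1) if a in axes else slice(None) for a in range(u.ndim))
    return corr / corr[origin]
```

For a field indexed `[x, y, z]` with x and z homogeneous, `two_point_correlation(u, axes=(0, 2))` returns R(Δx, y, Δz), with zero separation at index 0 along each correlated axis. `np.fft.fftshift(R, axes=(0, 2))` centers the result for plotting.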

Figure: Two-point correlations for three supersonic (Mach 2.86) cases in the buffer region (15 wall units from the wall) with three wall conditions: cooling, adiabatic, and heating walls (from left to right, respectively).

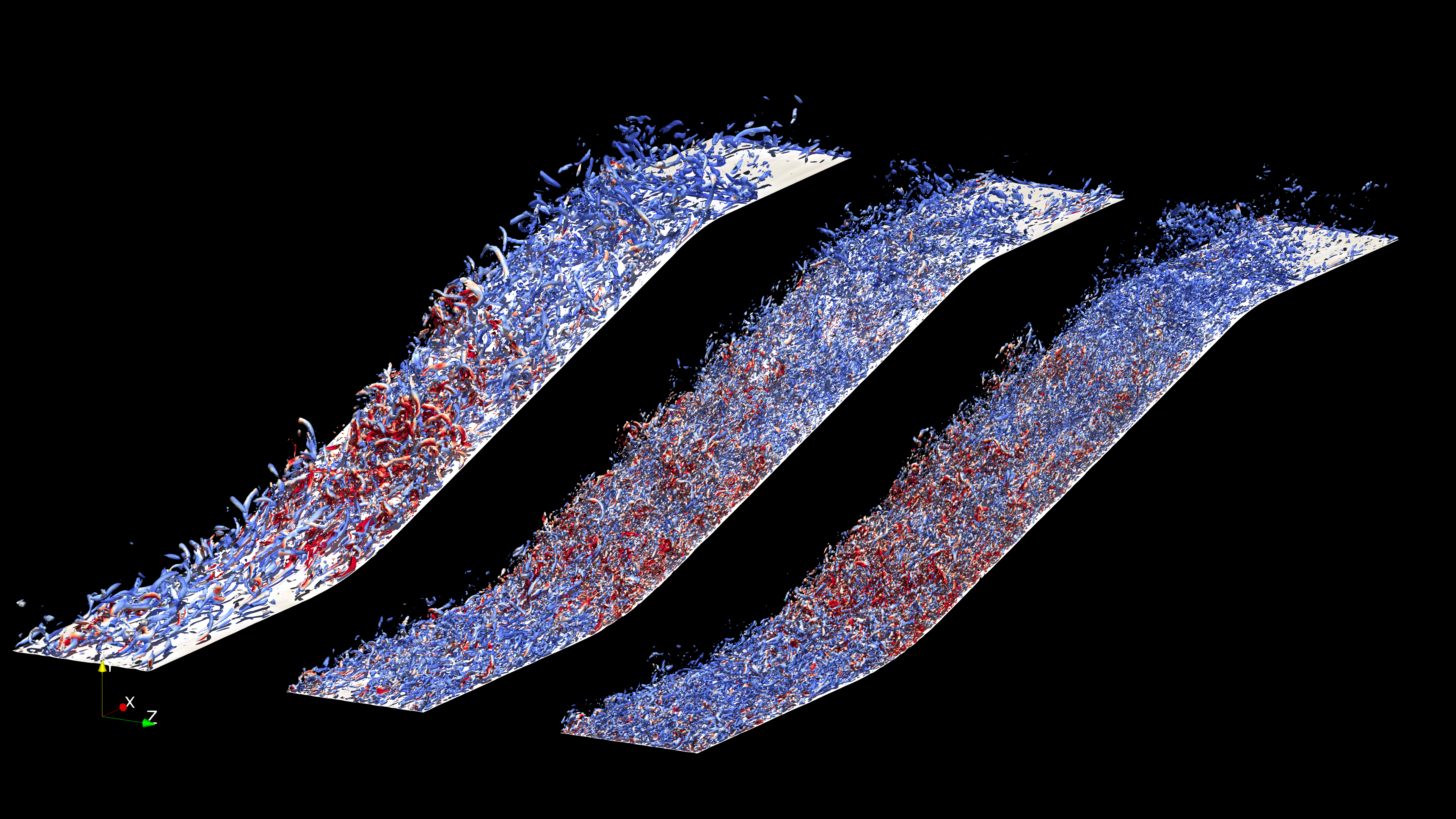

Figure: Q-criterion colored by turbulent kinetic energy for three wall conditions: cooling, adiabatic, and heating walls (from left to right, respectively).

Instantaneous Flow Parameters

Understanding complex turbulent effects requires visualizing both instantaneous and mean flow parameters to gain a complete picture of non-localized temporal dependencies and instantaneous effects. One such instantaneous visualization technique is the Q-criterion, an Eulerian method for identifying vortex cores. However, computing the Q-criterion for a large input dataset produces a correspondingly large volume of output. An efficient, asynchronous mechanism is therefore required so that the I/O operations needed to write the Q-criterion for every time step do not block the remainder of the post-processing pipeline. Aquila uses the same pool of threads that performs out-of-core asynchronous data pre-fetching to schedule any instantaneous visualization operations.
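For reference, the Q-criterion is `Q = 0.5 * (||Omega||^2 - ||S||^2)`, where S and Omega are the symmetric (strain-rate) and antisymmetric (rotation-rate) parts of the velocity-gradient tensor. Positive Q marks regions where rotation dominates strain. The NumPy sketch below evaluates Q on a uniform grid using `np.gradient`, which applies central differences in the interior and one-sided differences at the edges. It hands each result to a hypothetical `save` callback on a background thread pool, so computing step k+1 overlaps writing step k. The function names, the uniform-grid assumption, and the separate `ThreadPoolExecutor` (Aquila instead reuses its pre-fetching pool) are all illustrative choices.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Tuple

import numpy as np


def q_criterion(u, v, w, dx: float, dy: float, dz: float) -> np.ndarray:
    """Q = 0.5 * (||Omega||^2 - ||S||^2) for velocity arrays indexed [x, y, z]."""
    # grad[i][j] = d(u_i)/d(x_j); central differences inside, one-sided at edges.
    grad = [np.gradient(c, dx, dy, dz) for c in (u, v, w)]
    q = np.zeros(np.shape(u), dtype=float)
    for i in range(3):
        for j in range(3):
            s_ij = 0.5 * (grad[i][j] + grad[j][i])  # strain-rate tensor
            o_ij = 0.5 * (grad[i][j] - grad[j][i])  # rotation-rate tensor
            q += 0.5 * (o_ij ** 2 - s_ij ** 2)
    return q


def process_steps(fields: Iterable[Tuple[np.ndarray, np.ndarray, np.ndarray]],
                  spacing: Tuple[float, float, float],
                  save: Callable[[int, np.ndarray], None],
                  max_workers: int = 2) -> None:
    """Compute Q per time step; `save` runs on worker threads so the
    compute loop does not wait for output I/O to finish."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(save, step, q_criterion(u, v, w, *spacing))
                   for step, (u, v, w) in enumerate(fields)]
        for fut in futures:
            fut.result()  # re-raise any error from a write
```

As a sanity check, solid-body rotation (`u = -y`, `v = x`) gives Q = 1 everywhere, and pure strain (`u = x`, `v = -y`) gives Q = -1.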